Why AI Safety Prompts Aren’t Enough: Understanding the Risks Behind User Click-Through Warnings

AI tools from major tech companies often rely on pop-up warnings and permission prompts to keep users safe. But experts argue that these security barriers can fail because many people either don’t understand the risks or simply click “yes” without thinking. Recent “ClickFix” attacks show how easily users can be manipulated into taking harmful actions, especially when they’re stressed or unaware of the danger. Critics say these warnings shift responsibility away from companies and onto users instead of solving deeper problems like prompt injection or hallucinations. With AI becoming a default part of everyday products from Microsoft, Apple, Google, and Meta, understanding these risks, and the limits of current protections, has never been more important.

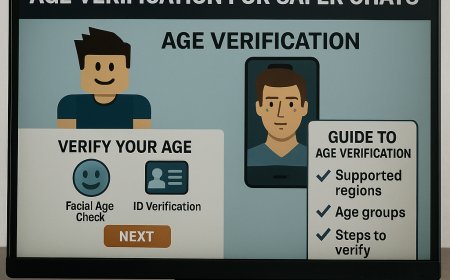

AI is being integrated into everything from our phones and browsers to our operating systems, but the safety measures put in place to protect users are far from perfect. A major concern is the heavy reliance on warning windows and permission prompts that ask users to confirm potentially risky actions. Although these prompts look like strong security protections on the surface, experts say they often fail because they depend on users making smart decisions every time.

Security researcher Earlence Fernandes of the University of California, San Diego, summarized this problem clearly. Users might not fully understand what they are approving, or they may get so used to seeing pop-ups that they automatically click “yes.” When that happens, the boundary meant to keep them safe essentially disappears.

This concern has grown after a wave of “ClickFix” attacks, scams that trick people into following dangerous instructions. Even tech-savvy users have fallen for them, but everyday users are especially vulnerable. Some may be tired, overwhelmed, or stressed and accidentally approve something harmful. Others simply don’t have the background knowledge to recognize what is dangerous. These situations are not about carelessness; they are often inevitable human reactions.

Many experienced users blame victims for falling for these scams, arguing that people should know better. But critics insist this is unfair and overlooks the real issue: humans are not perfect. Security design that expects constant vigilance is flawed from the start.

This brings us to Microsoft’s most recent warnings about AI features. According to critics, these warnings feel more like legal protection for the company than meaningful user safety. Reed Mideke, one vocal critic, argued that Microsoft and other AI developers still have no real solution for problems like prompt injection or hallucinations. Because they haven’t solved these core issues, he says, they shift responsibility onto users by telling them to double-check everything.

It’s similar to chatbots that come with disclaimers saying users should verify any important information. But, as Mideke pointed out, people turn to chatbots precisely because they need answers. Telling them to verify everything makes the tool far less useful and places the burden back on the user.

His criticism doesn’t apply to Microsoft alone. Companies like Apple, Google, and Meta are rapidly integrating AI into their products as well. In many cases, these AI features start out optional but become a default part of the product over time. This increases the importance of strong, reliable safety systems and highlights the weaknesses in the current approach.

The common theme across these concerns is that relying on user behavior is not enough. People cannot be expected to understand every technical risk or navigate each one perfectly. Good security design should assume that users make mistakes and should protect them even when they do.

Pop-up warnings and approval prompts can play a role, but they cannot be the primary line of defense. Real solutions must involve building AI systems that are resilient by design: less vulnerable to manipulation, harder to exploit, and more transparent in their behavior. As long as companies depend on user approval as the main safety mechanism, the risks will continue.

As AI becomes more deeply woven into everyday technology, clear communication, stronger protections, and better system design are essential. Users should not bear the burden of navigating complex risks alone. Tech companies must take responsibility for ensuring that AI is safe, reliable, and truly built to support the people who use it.
